hyperconverged appliance (HCI appliance)

What is a hyperconverged appliance (HCI appliance)?

A hyperconverged appliance (HCI appliance) is a hardware device that provides multiple data center management technologies within a single box. Hyperconverged systems are characterized by a software-centric architecture that tightly integrates compute, storage, networking, virtualization and other resources. Early systems were built on commodity hardware, but premium appliances have since become more common.

Typically, a hyperconverged appliance is sold as an integrated bundle, even if it contains products from different vendors, such as a hardware vendor and a hypervisor vendor. The vendor certifies that all the appliance's hardware and software components work with one another and acts as a single point of contact for technical support.

Hyperconvergence developed out of the converged infrastructure model, which combined disparate data center components into an appliance form factor to minimize compatibility issues and simplify the management of servers, storage systems and network devices, while also reducing costs for cabling, cooling, power and floor space.

How does hyperconvergence work?

Hyperconvergence pools and abstracts the underlying hardware resources using software-defined technologies, allowing for flexible management and resource allocation. This enables independent and dynamic scaling of computing, storage and network resources.

Hyperconverged appliances almost always use server virtualization and storage virtualization. As such, nearly all hyperconverged appliances include a hypervisor, a program that enables multiple operating systems to share a single hardware host.

A hyperconverged appliance infrastructure also consists of industry-standard x86 servers and software-defined storage, packaged as a series of modular, standardized nodes. Initially, each node was designed to be entirely self-contained and included compute, network and storage hardware. These nodes are typically installed in a special-purpose chassis. If an organization needs to scale its hyperconverged deployment, it simply installs additional nodes into the chassis. However, some vendors now use external storage arrays, which let organizations scale compute and storage resources independently of one another.
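The pooling and node-based scaling described above can be illustrated with a small model. This is a minimal sketch, not any vendor's software: the `Node` and `Cluster` classes and the node sizes are hypothetical, chosen only to show how identical, self-contained nodes add up to one pool of resources.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Node:
    """One self-contained HCI node (sizes are hypothetical)."""
    name: str
    cpu_cores: int
    memory_gb: int
    storage_tb: float


@dataclass
class Cluster:
    """A chassis of nodes whose resources are presented as a single pool."""
    nodes: list[Node] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        # Scaling the deployment means installing another node.
        self.nodes.append(node)

    def pooled_resources(self) -> dict[str, float]:
        # The software layer abstracts per-node hardware into cluster totals.
        return {
            "cpu_cores": sum(n.cpu_cores for n in self.nodes),
            "memory_gb": sum(n.memory_gb for n in self.nodes),
            "storage_tb": sum(n.storage_tb for n in self.nodes),
        }


cluster = Cluster()
for i in range(3):
    cluster.add_node(Node(f"node-{i + 1}", cpu_cores=32, memory_gb=512, storage_tb=20.0))
print(cluster.pooled_resources())

# Adding a fourth identical node grows compute, memory and storage together.
cluster.add_node(Node("node-4", cpu_cores=32, memory_gb=512, storage_tb=20.0))
print(cluster.pooled_resources())
```

In this model, every added node grows compute, memory and storage in lockstep, which is the limitation that external storage arrays are meant to address.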

Common hyperconverged appliance features

Numerous vendors offer hyperconverged appliances with varying feature sets. For instance, a hyperconverged appliance that's specifically designed to handle high-performance workloads likely includes all-flash storage or non-volatile memory express storage, whereas a hyperconverged appliance that's acting as a virtual desktop host might be equipped with a less expensive storage alternative.

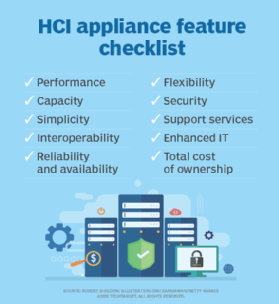

Some of the more important features to look for in a hyperconverged appliance include the following:

- Flash storage arrays.

- Out-of-band management capabilities.

- Support for the hypervisor of choice.

- Enough memory to run the desired workload.

- Vendor support.

- Scalability to accommodate workload growth.

- Management software.

- High availability.

- Compatibility with critical applications.

In addition, it can be helpful to check with hardware vendors to see if nodes are upgradable. HCI deployments are based on the idea that each node contains identical hardware. As such, vendors initially made it impossible to upgrade a node's hardware. However, many vendors now offer nodes with upgradeable components.

Hyperconverged appliance use cases

Some organizations inevitably adopt HCI appliances to use as general-purpose computing platforms. Although some hyperconverged appliances can be as costly as the enterprise-grade hardware found in traditional data centers, several vendors offer products that are based on commodity hardware. Such appliances can provide a cost-effective alternative to traditional hardware.

Although general-purpose hyperconverged appliances do exist, there are some specific use cases for which they're especially well suited. Some of the most popular use cases include the following:

- Cloud migrations. The majority of HCI appliances and infrastructure options offer intuitive administrative tools that enable easy resource migration from on premises to public clouds. In addition, contemporary hyperconverged appliances offer integrated application programming interfaces that make cloud migrations easier to handle.

- Hybrid cloud. Establishing a hybrid cloud environment for businesses is challenging due to the complexity of managing various products and components. But because of its design and user-friendly management, hyperconverged infrastructure has emerged as a popular choice for hybrid cloud deployments, enabling faster provisioning without the need for specialized skills.

- Backup and disaster recovery (DR). The centralized management capabilities of hyperconverged infrastructures facilitate effortless duplication of data and virtual machines (VMs) within and across clusters. Organizations can use this capacity to consistently support their operations and create a reliable DR system.

- Virtual desktop infrastructure (VDI). Organizations can build VDI deployments that combine compute, software-defined networking and software-defined storage.

- Edge computing. Deploying standalone servers, storage and networking products at edge locations presents several challenges, including scalability limitations and complex management. The inherent design of HCI can help eliminate these challenges for edge computing.

- Remote office/branch office (ROBO) computing. Centralized management is essential when managing a ROBO because it enhances security and minimizes administrative costs. By using virtualization software, hyperconverged appliances can provide centralized resources affordably without compromising performance.

- Kubernetes. Modern applications that are integrated across private, hybrid and public clouds can now be supported by HCI appliances. Organizations are using Kubernetes and HCI to speed up software updates, activate microservices, make stateless and stateful application deployment easier and move applications from on-premises to public cloud settings.

Benefits of hyperconverged appliances

Hyperconverged appliances unify traditional data center components and streamline operations, providing organizations with various benefits. Common benefits of hyperconverged appliances include the following:

- Modular design. Hyperconverged appliances follow a modular design, which is why they are so well suited to the use cases described above. Consider VDI, for example. In a VDI environment, virtual desktops tend to be identical to one another. An organization might have several categories of virtual desktops, such as one configuration for standard users and another configuration for power users, but the virtual desktops within a category tend to be identical to one another and, therefore, require identical hardware.

- Cost savings. An HCI appliance facilitates cost savings by eliminating separate hardware components and reducing power, cooling and space demands. In addition, appliances built on commodity hardware are an inexpensive alternative to conventional data center hardware, and their simple, integrated design makes them practical for locations with little or no on-site IT staff.

- Easy scaling. Just as virtual desktops are identical to one another, so too are the nodes in a hyperconverged appliance. Because each node is identical, administrators can determine exactly how many virtual desktops each node can host. This makes it easy for an organization to determine how many nodes it needs to purchase to run the required number of virtual desktops. Likewise, an organization can scale its VDI deployment by adding nodes as needed.

- Enhanced data protection. Many HCI appliances have built-in data protection features, including backup, replication and data deduplication. These qualities provide strong DR options while protecting data consistency.

- Improved performance. HCI appliances can offer better performance and lower latency than traditional infrastructure, as they combine compute, storage and networking capabilities into a single device. This can result in faster data access and processing times.

- Backup and DR. Hyperconverged appliances are also becoming a popular choice for backup and DR. Hyperconverged appliances generally include large amounts of fault-tolerant storage, which makes the appliances ideal for use as a backup appliance. In addition, most hyperconverged systems include a hypervisor. This means that in a DR situation, an organization could conceivably run VMs directly from the backup appliance for the duration of the outage.
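The node-count reasoning in the "Easy scaling" item can be worked through as simple arithmetic. The following is a rough sketch under stated assumptions: the function names, the per-desktop memory and CPU ratios, the memory reserved for the hypervisor and the single spare node are all hypothetical planning inputs, not figures from any vendor.

```python
import math


def desktops_per_node(node_memory_gb: int, node_cores: int,
                      desktop_memory_gb: int, desktops_per_core: int,
                      reserved_memory_gb: int = 0) -> int:
    """Desktops one node can host, limited by whichever resource runs out first."""
    by_memory = (node_memory_gb - reserved_memory_gb) // desktop_memory_gb
    by_cpu = node_cores * desktops_per_core
    return max(0, min(by_memory, by_cpu))


def nodes_required(total_desktops: int, per_node: int, spare_nodes: int = 1) -> int:
    """Nodes to purchase, rounded up, plus spare capacity for a node failure."""
    if per_node <= 0:
        raise ValueError("each node must be able to host at least one desktop")
    return math.ceil(total_desktops / per_node) + spare_nodes


# Hypothetical inputs: 512 GB / 32-core nodes, 4 GB desktops, 5 desktops per core,
# with 32 GB of memory per node held back for the hypervisor.
per_node = desktops_per_node(512, 32, 4, 5, reserved_memory_gb=32)
print(per_node)                        # 120 (memory is the limiting resource)
print(nodes_required(1000, per_node))  # 10 (9 nodes for the load, plus 1 spare)
```

Because every node is identical, the same per-node figure applies to each node added later, so growing the deployment is a matter of rerunning the calculation with a larger desktop count.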

HCI appliance market and vendors

Numerous vendors offer hyperconverged infrastructure appliances, including the following:

- Cisco HyperFlex.

- Cohesity C6000 Series.

- Dell EMC VxRail.

- Hewlett Packard Enterprise SimpliVity.

- Microsoft Azure Stack HCI.

- NetApp HCI.

- Nutanix Acropolis.

- Pivot3 Acuity.

- StarWind HyperConverged Appliance.

- Scale Computing Platform.

- StorMagic SvSAN.

- VMware vSAN.

Most of these vendors bundle their own hardware with third-party hypervisors from vendors such as VMware or Microsoft. In addition, some vendors, including VMware, offer software that organizations can use to build their own hyperconverged appliances.
