Storage

The state of data center convergence: Past, present and future

Discover if CI, HCI, dHCI or composable technology is right for your environment and workloads, and how the converged data center continues to evolve.

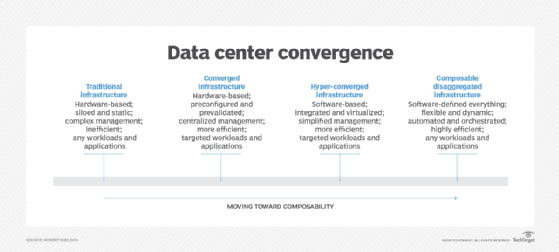

Data center convergence emerged to address the limitations of traditional infrastructure and storage, with the aim of finding ways to better integrate the discrete components that constitute IT infrastructure. The converged data center has evolved ever since.

The data center convergence movement started over a decade ago with the introduction of converged infrastructure (CI), a preconfigured hardware-based offering that streamlined infrastructure deployment and maintenance.

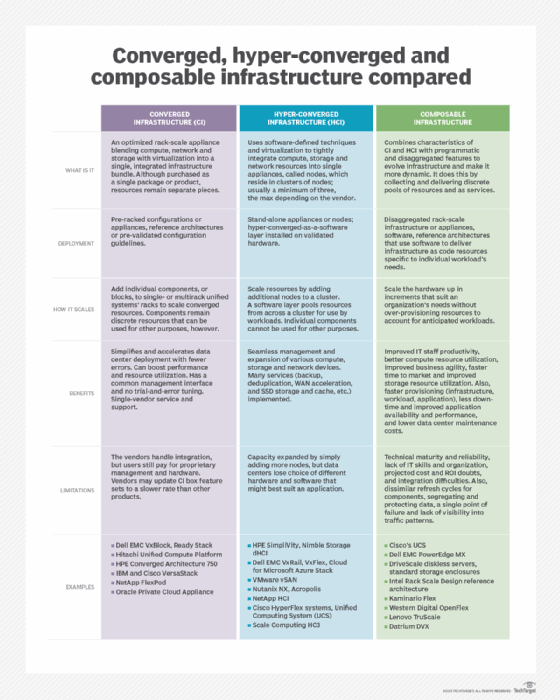

Hyper-converged infrastructure (HCI) built on this trend by providing a preconfigured software-based offering that simplified IT operations even further. This was followed by composable disaggregated infrastructure (CDI), which combined elements of both converged and hyper-converged infrastructure to deliver greater flexibility and integrated support for automation and orchestration.

These three infrastructures -- CI, HCI and CDI -- represent today's primary approaches to data center convergence. The lines between the types are sometimes blurred, however, with vendors taking liberties in how they define and categorize their products.

This has become even more pronounced with the trend toward hybrid clouds. Even so, the three kinds of converged infrastructures all share a common goal: addressing the challenges and inefficiencies of the traditional data center.

What is a converged data center?

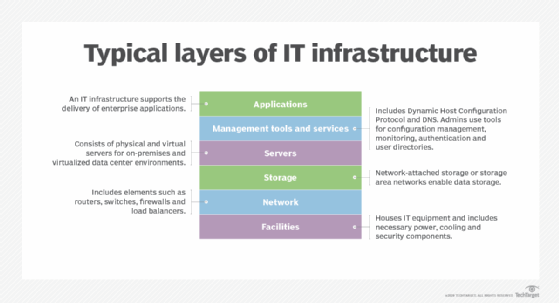

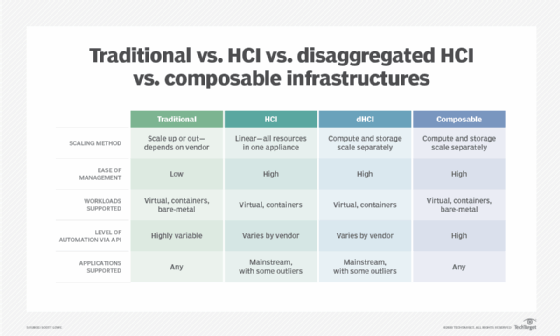

Traditional infrastructure is made up of standalone components that often come from multiple vendors and operate within their own silos. Application delivery is typically based on multi-tiered strategies, with equipment deployed and configured to support specific workloads. Although this approach provides a great deal of flexibility, it is costly and complex to maintain, and has ongoing compatibility and integration issues.

Even so, the traditional infrastructure met workload requirements well enough -- until the advent of modern applications, which were more complex, dynamic and resource-intensive. Not only did they require greater agility and scalability, but they could be geographically dispersed, making traditional architectures more cumbersome than ever.

Data center convergence addresses many of the limitations of traditional infrastructure and multi-tiered architectures, which are becoming increasingly difficult and expensive to maintain. Convergence can help streamline IT operations, better utilize data center resources, and lower the costs of deploying and managing infrastructure. Each converged system -- whether CI, HCI or CDI -- integrates compute, storage and network resources to a varying degree to meet the challenges of modern applications.

In a converged system, while every resource is essential to workload delivery, it is storage that drives the infrastructure, supporting the applications and their data. Converged storage helps address the limitations inherent in traditional systems such as NAS, SAN and DAS. These systems are fixed and difficult to change, or can be complex to deploy and manage, suffering many of the same drawbacks as other traditional infrastructure.

Data center convergence promises to deliver storage that's easier to deploy and manage, with CI, HCI and CDI each taking a different approach.

Converged infrastructure

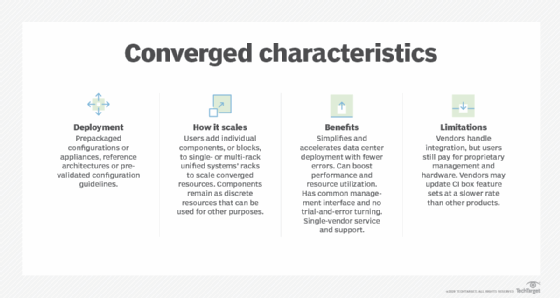

Converged infrastructure bundles server, storage and network resources into a turnkey rack-based appliance that addresses many of the compatibility and management challenges that come with traditional infrastructure. The appliance is highly engineered and optimized for specific workloads, helping to reduce deployment risks and better utilize resources.

A CI appliance can support HDDs, SSDs or a combination of both, with the ability to scale storage independently of other resources. Administrators manage infrastructure components through a centralized orchestration console.

With a CI appliance, organizations get a prequalified, preconfigured system that's easier to acquire, deploy and manage than traditional infrastructure. The infrastructure is implemented as a single standardized platform, with components prevalidated to work seamlessly with one another. This helps to increase data center efficiency, reduce overhead and lower costs. In addition, the centralized interface means less dependency on the management interfaces available to the individual components.

Unfortunately, converged infrastructure also shares some of the limitations of traditional infrastructure. For example, it lacks the agility and dynamic capabilities required by many of today's applications. Because a CI appliance is a hardware- rather than software-based offering, it doesn't provide the flexibility and simplified management offered by software-defined infrastructure. A CI appliance can also experience interoperability issues with other data center systems and lead to vendor lock-in.

Even so, converged infrastructure continues to be a popular alternative to traditional infrastructure, with vendors offering more robust and feature-rich CI products than ever. For example, the Dell EMC PowerOne system provides a zero-midplane server infrastructure that can support thousands of nodes and multiple petabytes of storage while delivering operational analytics and monitoring. Cisco offers a line of powerful CI systems that includes Cisco Intersight, a lifecycle management platform that automates deployment and management operations.

Hyper-converged infrastructure

The next evolution of data center convergence comes in the form of HCI, which extends converged infrastructure by adding a software-defined layer that combines compute, storage and network components into pools of managed resources. HCI adds tighter integration between components and offers greater levels of abstraction and automation to further simplify deployment and management. Each node virtualizes the compute resources and provides a foundation for pooling storage across all nodes, eliminating the need for a physical SAN or NAS.

An HCI offering is made up of self-contained nodes that are preconfigured and optimized for specific workloads. This building-block structure, along with the abstracted physical components, makes it easier to deploy and scale resources than with traditional or converged infrastructure, resulting in faster operations, greater agility and lower complexity. Additionally, many HCI appliances include built-in data and disaster recovery protections.

At the same time, HCI comes with several challenges. For example, many HCI systems combine compute and storage resources in the same node. The only way to scale these systems is to add nodes in their entirety, which can result in overprovisioning either the compute or storage resources. In addition, HCI is typically preconfigured for specific workloads, limiting its flexibility to support specialized applications. Also, much like CI, it can be difficult to avoid vendor lock-in with HCI.

However, HCI has proven to be popular, especially as vendors continue to improve their systems and address HCI limitations. One example of this is disaggregated HCI (dHCI), which separates the physical compute and storage resources into individual nodes to provide more flexible scaling options. For instance, Hewlett Packard Enterprise offers its Nimble Storage dHCI product, which is built on HPE ProLiant servers and HPE Nimble storage. Because it's a dHCI offering, users can scale the servers and storage independently.
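The scaling trade-off between combined-node HCI and dHCI comes down to simple arithmetic, which the following Python sketch illustrates. It is only a rough model under stated assumptions: the `NodeSpec` type, the function names and the node sizes are hypothetical and do not describe any vendor's product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical node size; the figures used below are illustrative only."""
    cores: int
    storage_tb: int


def nodes_needed(required: int, per_node: int) -> int:
    """Smallest node count whose combined capacity covers the requirement."""
    return (required + per_node - 1) // per_node  # integer ceiling division


def hci_plan(cores: int, storage_tb: int, node: NodeSpec) -> dict:
    """Combined nodes: the scarcer resource dictates the node count."""
    count = max(nodes_needed(cores, node.cores),
                nodes_needed(storage_tb, node.storage_tb))
    return {
        "nodes": count,
        "spare_cores": count * node.cores - cores,
        "spare_storage_tb": count * node.storage_tb - storage_tb,
    }


def dhci_plan(cores: int, storage_tb: int,
              cores_per_compute_node: int, tb_per_storage_node: int) -> dict:
    """Disaggregated nodes: compute and storage are sized independently."""
    compute = nodes_needed(cores, cores_per_compute_node)
    storage = nodes_needed(storage_tb, tb_per_storage_node)
    return {
        "compute_nodes": compute,
        "storage_nodes": storage,
        "spare_cores": compute * cores_per_compute_node - cores,
        "spare_storage_tb": storage * tb_per_storage_node - storage_tb,
    }


# A storage-heavy workload: 64 cores and 400 TB.
print(hci_plan(64, 400, NodeSpec(cores=32, storage_tb=20)))
print(dhci_plan(64, 400, cores_per_compute_node=32, tb_per_storage_node=20))
```

With these made-up numbers, the combined-node plan needs 20 nodes to reach 400 TB and leaves 576 cores idle, while the disaggregated plan pairs two compute nodes with 20 storage nodes and leaves nothing spare. Real sizing would also need to account for redundancy, licensing and performance headroom, which this sketch ignores.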

Hyper-converged vendors are also making improvements to help address the demands of mission-critical workloads that require high-performing systems. For example, Cisco's HyperFlex All NVMe systems support both Intel QLC 3D NAND NVMe SSDs and Intel Optane DC SSDs, which provide greater IOPS and lower latency. The Cisco HCI systems also offer the HyperFlex Acceleration Engine for offloading compression operations from the CPUs, helping to lower latency even further while improving VM density.

Composable disaggregated infrastructure

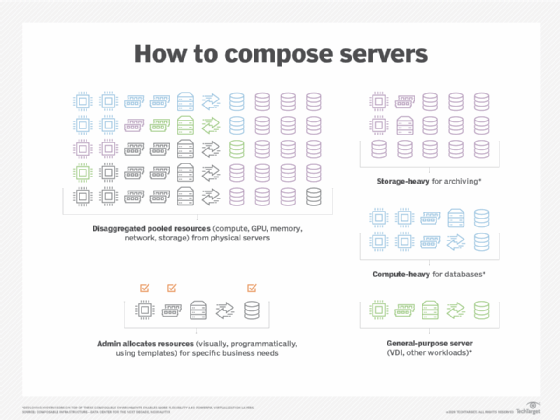

The next stage in the data center convergence evolution is composable disaggregated infrastructure, also referred to as composable infrastructure. CDI combines the best of CI and HCI, and then extends them to offer a completely software-driven environment. CDI abstracts the compute, storage and network resources and delivers them as services that can be dynamically composed and recomposed to meet changing application needs. The infrastructure also provides a comprehensive management API that administrators and developers can use to provision resources and orchestrate and automate operations.
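To make the idea of composing and recomposing resources more concrete, here is a minimal Python sketch of a shared resource pool. It is a toy model, not a real CDI management API: the `ResourcePool` class, its method names and the resource keys are all assumptions made for illustration.

```python
class ResourcePool:
    """Hypothetical pool of disaggregated resources shared by all workloads."""

    def __init__(self, cores: int, storage_tb: int, nics: int) -> None:
        self.free = {"cores": cores, "storage_tb": storage_tb, "nics": nics}
        self.systems = {}  # logical system name -> resources it currently holds

    def compose(self, name: str, **request: int) -> None:
        """Carve a logical system out of the pool, or fail without side effects."""
        if name in self.systems:
            raise ValueError(f"{name} is already composed")
        unknown = set(request) - set(self.free)
        if unknown:
            raise ValueError(f"unknown resources: {sorted(unknown)}")
        short = [kind for kind, amount in request.items()
                 if amount > self.free[kind]]
        if short:
            raise RuntimeError(f"not enough free: {', '.join(short)}")
        for kind, amount in request.items():
            self.free[kind] -= amount
        self.systems[name] = dict(request)

    def release(self, name: str) -> None:
        """Return a logical system's resources to the pool."""
        for kind, amount in self.systems.pop(name).items():
            self.free[kind] += amount

    def recompose(self, name: str, **request: int) -> None:
        """Resize a logical system; restore the old shape if the new one fails."""
        previous = self.systems[name]
        self.release(name)
        try:
            self.compose(name, **request)
        except (ValueError, RuntimeError):
            self.compose(name, **previous)  # roll back to the previous allocation
            raise


pool = ResourcePool(cores=128, storage_tb=500, nics=8)
pool.compose("db", cores=32, storage_tb=200, nics=2)
pool.recompose("db", cores=64, storage_tb=100, nics=2)  # more compute, less storage
print(pool.free)
```

The point of the sketch is that a workload's shape is just a record against a common pool, so changing it is a software operation rather than a hardware change. An actual composable platform would expose this kind of operation through its own management API and handle far more detail, such as fabric configuration and device discovery.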

Composability uses resources more efficiently than CI or HCI, and it can better accommodate diverse and changing workloads. For example, original CI and HCI systems could only run virtualized workloads. Many of the newer systems now support containers; however, only composable disaggregated infrastructure can run applications in VMs, in containers and on bare metal. CDI is also better suited to accommodate modern applications. Its comprehensive API simplifies management and streamlines operations, eliminating much of the deployment and optimization overhead that comes with other infrastructures.

On the other hand, composable infrastructure is a young technology that lacks industry standards and even a common definition of what composability means. Composable vendors are on their own to build their CDI offerings, each following a unique set of rules. Not only does this raise the risk of vendor lock-in, but it can lead to interoperability issues should an organization deploy composable disaggregated infrastructure products from multiple vendors.

In many ways, composability is still as much a vision as it is a reality, and it has a long way to go before achieving the type of software-defined ideal that drives the movement. Yet vendors are plowing forward and making important strides. For instance, HPE now offers both its Synergy and Composable Rack platforms, which provide software-defined composable systems that disaggregate components into logical resource pools to offer flexible deployment environments. HPE recently integrated its Primera storage platform into both CDI systems, fitting them with all-flash storage arrays that target mission-critical enterprise workloads.

Choosing a converged product

Before deciding on a converged system, IT should determine what applications and workloads it needs to support and their requirements for performance, data storage, data protection and future scaling.

Converged infrastructure appliances typically target larger enterprises and are configured for specific applications or workloads. Initially, hyper-converged infrastructure focused more on small to midsize organizations looking to implement VDI, but this has been changing with organizations of all sizes turning to HCI to support a variety of workloads.

Some organizations use CI and HCI for many of the same reasons, including server virtualization, database systems, business applications, and test and development. Even so, HCI is better suited for edge environments, smaller organizations with limited IT resources, and companies looking to simplify complex scenarios such as VDI or mixed applications.

Organizations that support modern workloads, use DevOps methodologies or require a high degree of application flexibility will likely turn to composable infrastructure, especially if they run applications on bare metal. CDI's automation capabilities can also help IT teams streamline their operations, while the API enables them to integrate with third-party management tools. Composable disaggregated infrastructure can also benefit workloads such as AI and machine learning, which might require dynamic resource allocation to support multiple processing operations and data fluctuations.

Enterprises are not required to pick one type of infrastructure to meet all workload requirements either. They might, for example, implement CI systems in their data centers and deploy HCI offerings to edge locations because those incur less administrative overhead and can be managed remotely. IT teams might even stick with traditional infrastructure in some cases to maintain complete control over their systems at the most granular level possible.

When it comes time to acquire a data center convergence system, businesses have several options, depending on the infrastructure type. They can purchase or lease an appliance that comes ready to deploy, or they can acquire one through a program such as HPE GreenLake, which delivers IaaS much like the cloud.

Another option is the DIY approach, in which the IT department purchases the necessary software and hardware and assembles the system itself -- perhaps following a reference architecture that specifies which components to use and how to configure them.

What's ahead for data center convergence?

The complexities of modern workloads will no doubt continue to drive vendors to produce converged data center systems that offer greater capabilities, increase flexibility, and simplify deployments and management. At the same time, edge deployments will likely continue to grow, increasing HCI popularity even more. Another important trend is infrastructure that also fills the role of the hybrid cloud, combining the qualities of a converged system with the service-oriented features of the cloud.

Automation will also become a more important component of converged systems, making it easier for users to deploy workloads and manage their environments. Along with automation will come greater intelligence that incorporates AI, machine learning and other advanced technologies to better utilize resources, improve performance, and identify and address potential issues before they become serious. In conjunction with the growing intelligence will come sophisticated methods to proactively address security and compliance issues before they cause irreparable damage.

A potential disrupter on the horizon is the infiltration of cloud hosting companies into data centers. For example, AWS Outposts provides a fully managed service that extends AWS infrastructure, services, tools and APIs into on-premises environments. It might take a few years before the movement's impact is felt, especially if organizations are reluctant to give in to the cloud giants even further. But with the increasing emphasis on the hybrid cloud, organizations will continue to look for the cheapest, most effective ways to accommodate their applications. These cloud-like services could be too tempting to resist.

Will modern hybrid clouds oust the converged system?

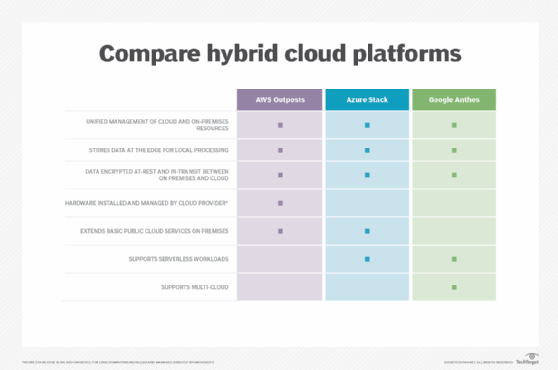

AWS Outposts extends the Amazon Web Services infrastructure into on-premises locations, including data centers, colocation facilities and edge environments. Amazon provides a hardware infrastructure along with AWS services, tools and APIs to deliver a hybrid offering that's fully integrated with the AWS cloud platform.

AWS delivers the infrastructure, sets it up, and continues to manage and support it by monitoring, patching and updating components. In this way, a customer can focus entirely on building and running its apps using native AWS services.

Not to be outdone, Microsoft has come out with Azure Stack and Google has implemented Google Cloud Anthos, which are similar services but with a few distinctions. For example, Azure Stack supports serverless workloads, and Anthos supports multi-cloud scenarios; AWS Outposts offers neither of these. Azure Stack and Anthos also have different hardware requirements and service models.

Despite their differences, the principle behind all three services is the same: to extend their cloud platforms into customers' on-premises spaces.

With such a program, organizations get a cloud-like service that eliminates many of the headaches that come with other infrastructure products, all while gaining a hybrid cloud environment that's ready to go. There are limitations to this environment, of course, with vendor lock-in taken to new heights. For many, however, the simplicity and ease with which they can get started makes such a model very enticing.

The benefits that on-premises hybrid cloud services offer sound a lot like those of converged systems such as HCI, especially those offered through consumption-based programs like HPE GreenLake. In fact, many vendors now offer consumption-based programs, many of which include converged systems, and both the programs and the systems are often described as the future of on-premises infrastructure.

With two such indomitable forces -- hardware vendors and cloud providers -- claiming on-premises space for their infrastructure wares, it raises the question of where the converged system will stand once the dust has settled. It's conceivable that the two will coexist side by side, pushing traditional infrastructure out altogether while providing more options to meet the demands of tomorrow's workloads.

No doubt, many organizations will resist ceding more territory to the cloud space. On the other hand, customers already invested in a cloud platform might appreciate the uniformity and consistency that such a service provides.

Infrastructure technologies are, if nothing else, dynamic and fluid -- and they're evolving at an unprecedented rate. Five years from now, data center products could look nothing like what we have today, and terms such as converged, hyper-converged and composability might have little meaning. What will remain is the desire for infrastructure that is simple to deploy and provision, and can accommodate the applications of the future.

Whoever can make that happen is sure to come out on top.

Despite the ongoing march of cloud providers, converged systems will likely be around for some time to come, and they'll only get more agile, powerful and compact. Meanwhile, the lines will continue to blur between systems, as demonstrated by dHCI and its growing support for intelligence and automation.

In all this, however, there might still be a place for traditional infrastructure -- although it's likely to play a smaller role as workloads grow more dynamic and IT budgets continue to shrink. On the other hand, this makes room for even more innovative technology in the future.